The history of personalized learning machines goes back 100 years. So why do we still not have fully digital tutors as good as human ones? Here is our summary of the dead ends, the challenges, and the promising paths we discovered while trying to build such a digital tutor for maths.

You can go only as far as pedagogy allows

In my 20 years in startups, one of the most important lessons I have learned is that what usually kills a product is some unacknowledged equivalent of a physical law: a hard limit on what can be done, no matter how much code and money you throw at it. In EdTech, that law is pedagogy.

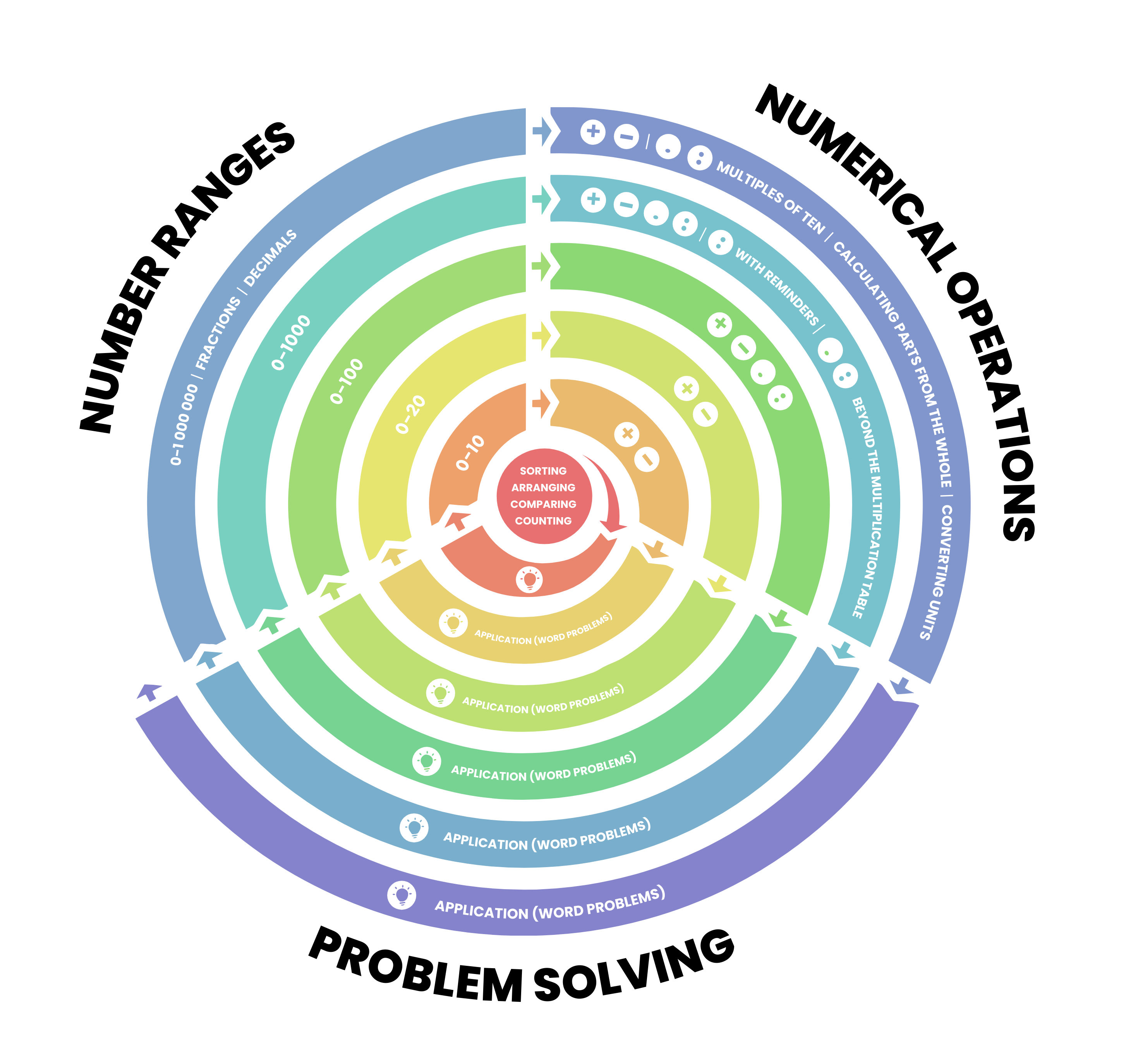

No amount of coding, gamification or marketing will save an app built on flawed teaching methods. In this regard, I am fortunate to keep learning from Renata Wolfová, one of Levebee's co-founders. She helped us create an evidence-based progression model grounded in her 30+ years of experience with math interventions, during which she has supported over 2,000 students in one-on-one sessions. Thanks to her, we knew from the start the following facts, which determine which apps can and cannot genuinely improve students' learning outcomes in maths.

The root causes of knowledge gaps in maths can go back years, well before the struggles a student currently faces. For example, some eighth graders still struggle with basic place value and cannot write "eight hundred sixty-four" in digits, while others are confused by tasks that mix positive and negative criteria, even though these are preschool-level concepts. Covid widened these gaps even further.

Any tutoring (human or digital) that targets only the current problem won't suffice. Children may mask the true root cause with mechanical strategies, such as computing 15 + 2 by adding 5 + 2 and then writing a 1 in front of the result, without grasping the underlying logic.

In principle, digital tutors that do not cover early maths can never help low achievers. Experienced math interventionists understand this well. Unfortunately, there are too few of them, and classroom teachers rarely have the background to fill the gap: very few 8th-grade teachers, for example, have enough experience with preschool curricula.

This is how we came to realize that developing a personalized digital tutor for mathematics requires studying Tier 3 math interventions (one-on-one sessions), which represent the most personalized form of math instruction available.

So that's what we did. We recorded experienced math interventionists working with students and analyzed their interactions. After reviewing the videos with the interventionists to understand their reasoning, we set out to design algorithms that could mimic their responses in similar situations.

Technology is not there yet

Our research led us to conclude that human math interventions are far more interactive than current technology allows. While speech generation and recognition have improved recently, recognizing and responding to students' psychological states, such as fatigue, low self-esteem, or cognitive overload, remains challenging.

Human interactions are also much faster than interactions with an app. In our limited dataset, a single interaction took only 2.29 seconds on average. The teacher and student are in constant verbal and non-verbal communication: they complete or interrupt each other's sentences and use gestures and facial expressions to signal understanding or confusion instantly. Even the most advanced LLMs cannot operate at this speed. And generation speed is not the only problem: LLMs struggle to infer students' intentions from fragmented sentences, and have an even harder time conveying their own intent to the student as efficiently.

An app also lacks awareness of its broader surroundings, even in a hypothetical scenario where a camera is always on (I'm skeptical that parents would want their kids moving around the home with the camera on). Obviously, an app cannot react to external distractions, such as a bird landing on the windowsill. More subtly, it is hard to reliably detect the pedagogical cues needed to decide on the next step, such as whether a child is counting on their fingers one by one.

I'm patiently optimistic because, unlike issues stemming from ineffective teaching methods, these problems are solvable in principle; the technology just hasn't caught up yet. I believe it's useful to identify and articulate these issues in advance so we can be the first to apply the emerging technological breakthroughs that might address them.

To give credit where it's due, technology does outperform human teachers in some ways. For example, an app can offer unlimited patience, which partially compensates for slower interactions and gives students more opportunities to solve problems on their own and reach fluency. Interestingly, students take feedback from an app seriously (which required us to fine-tune the tone of the audio feedback), but not as seriously as feedback from a person. In general, they are more comfortable receiving negative feedback from an app and more eager to demonstrate their mastery to humans.

Making humans better at math interventions

OK, so if we can't build a fully digital maths tutor yet, how can we use technology to make humans better at math interventions? At Levebee, we believe we can embed the knowledge of experienced interventionists into an app to make the job easier for them and for teachers who are just getting started with math interventions.

In other words, the digital tutor should tutor not only the student but, in a sense, the teacher as well. It should improve the teacher's understanding of the student and of math interventions in general.

Purely statistical personalized tutors cannot provide this. Reducing a student to a set of scores doesn't tell teachers what to do; they need clear explanations of why the student is struggling in order to work with them beyond the app.

This can only be achieved with a clearly articulated progression model. Based on such a model, Levebee Diagnostic gives teachers a detailed interpretation of what may be causing a student's struggles and what can help, both in and out of the app.
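
A progression model makes this kind of explanation possible because it records which skills each skill builds on. The sketch below assumes, purely for illustration, that such a model can be represented as a prerequisite graph; the skill names, `PREREQUISITES`, and the `root_causes` function are hypothetical and are not Levebee's actual model or code. Tracing down from a struggling skill, it reports the earliest unmastered skills, i.e. those whose own prerequisites are all mastered.

```python
from typing import Dict, List, Set

# Hypothetical fragment of a progression model: each skill maps to the skills it builds on.
# Skill names are illustrative only and are not taken from Levebee's actual model.
PREREQUISITES: Dict[str, List[str]] = {
    "two_digit_addition_with_carrying": ["place_value_tens", "addition_within_20"],
    "addition_within_20": ["counting_on"],
    "place_value_tens": ["counting_to_100"],
    "counting_on": [],
    "counting_to_100": [],
}


def root_causes(skill: str, mastered: Set[str]) -> Set[str]:
    """Return the earliest unmastered skills underneath `skill`.

    A skill counts as a root cause when the student has not mastered it
    but has mastered everything it depends on.
    """
    if skill in mastered:
        return set()
    deeper: Set[str] = set()
    for prereq in PREREQUISITES.get(skill, []):
        deeper |= root_causes(prereq, mastered)
    # If no prerequisite is missing, the gap starts at this skill itself.
    return deeper or {skill}


student_mastered = {"counting_on", "addition_within_20", "counting_to_100"}
print(root_causes("two_digit_addition_with_carrying", student_mastered))
# {'place_value_tens'}
```

In this toy example, the student struggles with carrying because of place value rather than basic addition, which is the kind of explanation a single score cannot give.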

To be widely used, a diagnostic must also be fast. Thanks to the underlying progression model, Levebee Diagnostic adapts quickly to the child and takes only 15 minutes on average. It skips tasks when further testing would not change the pedagogical recommendations.
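
One way to read "skips tasks when further testing would not change the recommendations" is as a lookahead check before each task. The sketch below is hypothetical: the four-skill `CHAIN`, the rule "start practice at the earliest failed skill", and testing in chain order are assumptions for illustration, not how Levebee Diagnostic actually works. Before asking a task, it compares the recommendation the student would get after passing with the one after failing, and skips the task when they match.

```python
from typing import Dict, List, Tuple

# Hypothetical skill chain, ordered from earliest to latest; not Levebee's real model.
CHAIN: List[str] = ["counting", "place_value", "addition_within_20", "carrying"]


def recommend(results: Dict[str, bool]) -> str:
    """Hypothetical rule: practice starts at the earliest failed skill."""
    for skill in CHAIN:
        if results.get(skill) is False:
            return f"start practice at {skill}"
    return "continue at grade level"


def outcome_matters(skill: str, results: Dict[str, bool]) -> bool:
    """One-step lookahead: would passing vs. failing this task change the advice?"""
    if_pass = recommend({**results, skill: True})
    if_fail = recommend({**results, skill: False})
    return if_pass != if_fail


def run_diagnostic(answers: Dict[str, bool]) -> Tuple[str, List[str]]:
    """Ask tasks in chain order, skipping those whose outcome cannot change the advice."""
    results: Dict[str, bool] = {}
    asked: List[str] = []
    for skill in CHAIN:
        if not outcome_matters(skill, results):
            continue  # either answer leads to the same recommendation, so skip it
        asked.append(skill)
        results[skill] = answers[skill]
    return recommend(results), asked


advice, asked = run_diagnostic(
    {"counting": True, "place_value": False, "addition_within_20": True, "carrying": False}
)
print(advice)  # start practice at place_value
print(asked)   # ['counting', 'place_value']
```

With this rule and this testing order, once a skill has been failed no remaining task can change the advice, so the sketch asks only two of the four tasks.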

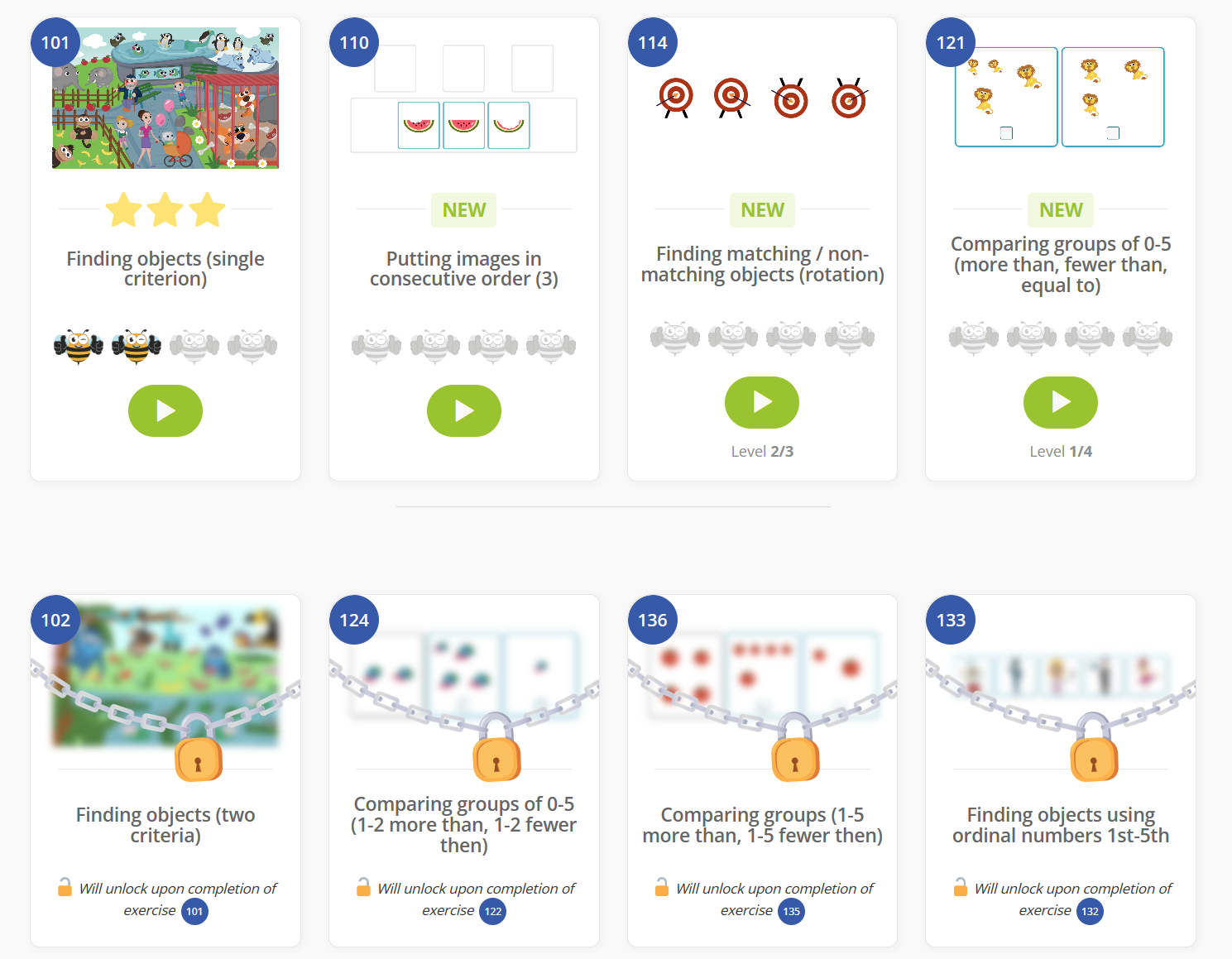

To respect teachers' limited time, Levebee provides hundreds of interactive exercises that let students learn and practice independently, as far as technology allows. The exercises are assigned automatically after the diagnostic and continue to adapt to the student.

However, contrary to the widespread belief that apps are addictive, keeping children practicing independently without supervision is challenging. Many factors cause children to disengage from an app, including accessibility issues for children with special needs and for bilingual learners.

Still, technology can make supervision easier and math interventions more efficient. For example, each exercise in Levebee comes with a detailed pedagogical methodology. It serves as professional development for teachers in small doses, delivered just when they can apply the new knowledge to help a specific student.

The road ahead

We work closely with NGOs such as People in Need that use volunteers to help children in disadvantaged areas. These partnerships allow us to understand what it takes to ensure that anyone who wants to help children with maths can do so, regardless of their own educational background.

Levebee has already earned the trust of 500 schools with annual subscriptions, benefiting 400,000 students in total. We are currently running pilot programs in 15 countries. If you are interested in joining our pilots, please don't hesitate to contact us!

Published on April 2, 2024